Although I had lots of excellent exposure to the world of AI in my previous co-op, this term I was able to really dive deep into the fun of researching, data wrangling, model implementation and training. I got to touch all parts of the system and push different models until they broke.

Replicant is a remote-first company based in California. It develops Thinking Machines™, conversational bots designed to handle customer calls and messages through natural language. Gone are the days of “Press 1 for roadside assistance; Press 2 for profile updates.”

A conversation with one of these bots can be distilled into four steps: converting the audio into interpretable text, understanding the user, making a decision, and converting the response back into human-understandable audio.

Most of the machine learning that my team and I worked on focused on understanding the user: extracting their intents (goals or desired actions) and the detailed substance of their statement (names, dates, products, etc.), which are known as entities.
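To make the intent/entity distinction concrete, here is a minimal sketch of what the output of the "understanding" step could look like. The class names, the intent label, and the `annotate` helper are hypothetical and chosen for illustration; they are not Replicant's actual data model.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Entity:
    type: str   # e.g. "date", "make", "model"
    text: str   # the words exactly as the caller said them
    start: int  # character offset where the entity begins
    end: int    # character offset just past the entity


@dataclass
class Understanding:
    utterance: str
    intent: str  # the caller's goal or desired action
    entities: List[Entity] = field(default_factory=list)


def annotate(utterance: str, intent: str, spans: List[Tuple[str, str]]) -> Understanding:
    """Build an Understanding from (type, text) pairs by locating each text in the utterance."""
    entities = []
    for entity_type, text in spans:
        start = utterance.find(text)
        if start == -1:
            raise ValueError(f"{text!r} does not occur in the utterance")
        entities.append(Entity(entity_type, text, start, start + len(text)))
    return Understanding(utterance, intent, entities)


example = annotate(
    "I need a tow truck for my 2015 Honda Civic tomorrow",
    intent="request_roadside_assistance",  # hypothetical intent label
    spans=[("year", "2015"), ("make", "Honda"), ("model", "Civic"), ("date", "tomorrow")],
)
```

The intent captures what the caller wants, while each entity pins down one detail of the request together with where it appeared in the text.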

I worked on three distinct projects over my term, each touching a different area of the product: Make, Model and Year extraction, multilingual intent recognition, and custom entity extraction.

For the first project, I had to fine-tune a RoBERTa language model to accurately extract the make, model and year of a vehicle (car, SUV, RV, truck, etc.). RoBERTa is a large pre-trained transformer language model, a more robustly trained variant of BERT, that can be fine-tuned to perform very accurate named entity recognition (NER). General-purpose NER models are typically trained to extract entities like organizations, persons, locations, dates, and miscellaneous from a sentence. To train the model for my needs, I compiled and cleaned a large car dataset from multiple sources, covering the makes and models of many of the cars on the road. Then I created several sentence templates, filled those templates with the data, and ran the training on these synthetic sentences. When deployed to production, my model had an F1 score above 99%.
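The template-filling step can be sketched roughly as follows. This is an illustration under assumptions, not the code I used: the templates, the tiny car list, the year range, and the BIO tagging scheme (`B-` for the first token of an entity, `I-` for the rest, `O` for everything else) are all made up for the example.

```python
import random

# Hypothetical sentence templates; placeholders must be whitespace-separated.
TEMPLATES = [
    "my car is a {year} {make} {model}",
    "i drive a {make} {model} from {year}",
    "it's a {year} {make} {model} and it won't start",
]

# Stand-in for the cleaned car dataset.
CARS = [
    {"make": "honda", "model": "civic"},
    {"make": "ford", "model": "f-150"},
    {"make": "land rover", "model": "range rover sport"},
]


def fill_template(template, values):
    """Fill one template and return parallel lists of tokens and BIO tags."""
    tokens, tags = [], []
    for word in template.split():
        if word.startswith("{") and word.endswith("}"):
            slot = word[1:-1]
            slot_tokens = values[slot].split()
            tokens.extend(slot_tokens)
            # First token of the value gets B-, any remaining tokens get I-.
            tags.append(f"B-{slot.upper()}")
            tags.extend([f"I-{slot.upper()}"] * (len(slot_tokens) - 1))
        else:
            tokens.append(word)
            tags.append("O")
    return tokens, tags


def generate(n, seed=0):
    """Generate n synthetic (tokens, tags) training examples."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        car = rng.choice(CARS)
        values = {**car, "year": str(rng.randint(1990, 2023))}
        examples.append(fill_template(rng.choice(TEMPLATES), values))
    return examples
```

Because the labels come from the template slots, every generated sentence is annotated for free, which is what makes this approach practical when no labelled call data exists.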

The second project I worked on was more of a research investigation, known as a “SPIKE”. There was a big push from customers and potential customers for our bots to support other languages, so our models needed to be able to extract intents and entities regardless of the language. My SPIKE was to determine how to make DateTime and number extraction work in French and Spanish. This required me to read several papers and analyze our code (pre-processing, training and inference) to identify several paths that we could take. Then, I created an implementation plan for each of those options. Finally, I developed a testing framework and guidelines to compare all of the options. This involved speaking with the sales and operations teams to determine key business requirements (real-time, accurate extraction) and converting them into measurements compatible with machine learning (low-latency inference, F1 score over 95%).
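As an illustration of how such requirements become measurable, here is a minimal sketch of an entity-level F1 computation and a pass/fail check. The tuple format and function names are assumptions for the example, and the latency budget is deliberately left as a parameter because the actual target is not stated here; only the 95% F1 threshold comes from the requirements above.

```python
def f1_score(predicted, expected):
    """Micro-averaged F1 over collections of (utterance_id, entity_type, value) tuples."""
    predicted, expected = set(predicted), set(expected)
    true_positives = len(predicted & expected)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)  # how many extractions were right
    recall = true_positives / len(expected)      # how many true entities were found
    return 2 * precision * recall / (precision + recall)


def meets_requirements(f1, latency_ms, max_latency_ms, min_f1=0.95):
    """Check a candidate against the accuracy target and a caller-supplied latency budget."""
    return f1 > min_f1 and latency_ms <= max_latency_ms


# Toy French/Spanish-style evaluation data (made up for illustration).
expected = {
    (0, "number", "3"),
    (1, "datetime", "2021-06-01"),
    (2, "number", "12"),
    (3, "datetime", "2021-07-14"),
}
predicted = {
    (0, "number", "3"),
    (1, "datetime", "2021-06-01"),
    (2, "number", "12"),
    (3, "datetime", "2021-07-15"),  # wrong day, so this counts as a miss
}
score = f1_score(predicted, expected)
```

Scoring on exact (type, value) matches is strict on purpose: a date that is off by one day is as useless to the caller as no date at all.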

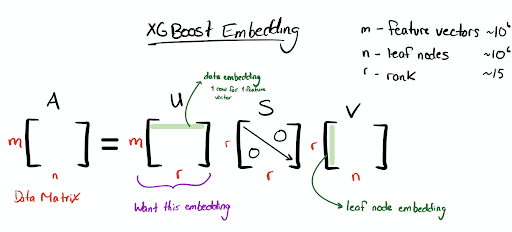

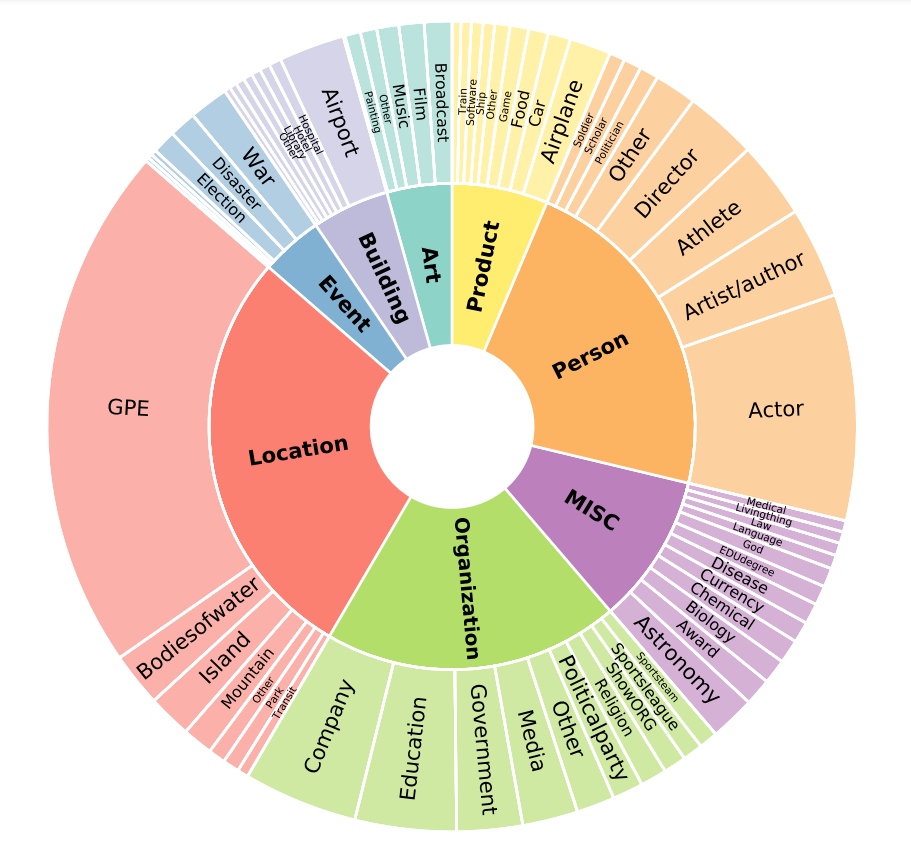

The final and most exciting project of my term was developing a model for custom entity extraction. The RoBERTa models that we use for other entity extraction tasks require large amounts of training data in order to achieve high accuracy. However, in several circumstances, there is little to no pre-existing data for the entities we are trying to extract. For example, if we are working with a health care company on sensitive information, there may not be any public data available for training. Or, in situations with industry-specific lingo or unique product types, the general models may not perform well enough. So in these cases, we need a way to extract these “custom” entities with few (few-shot) or no (zero-shot) training examples.

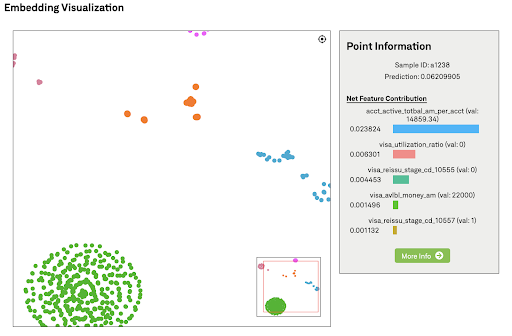

My job was to develop a model that Replicant could use to let its customers create their own entities. Few-shot named entity recognition (FSNER) is a growing research topic, but it is new and fragmented: there were many recent works, yet they rarely used the same test sets, built on each other, or compared themselves to one another. So my first step was to read, implement, and then compare several models that seemed promising. To compare the models for our specific needs, I curated two datasets to better estimate what the models’ accuracy would be in production. The first was a merged dataset from all of the entity extraction models that were currently in use (make and model, zip codes, pizza toppings, etc.). This tested how well a model could handle the entities we were already extracting and provided a good benchmark against the production models, which are trained on more data. The second was a dataset specifically designed for few-shot training. Few-shot entity extraction usually involves very specific entity types, so it is unrealistic to train on very broad types (person, organization, etc.); it makes more sense to train on fine-grained types (person-musician, misc-rivers, etc.). This dataset breaks the coarse-grained types into smaller types to better mimic the few-shot scenario.
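A rough sketch of how a few-shot split over fine-grained types could be built is shown below. It assumes, for simplicity, that each example carries a single `coarse-fine` type label such as `person-musician`; the function names and the dictionary layout are hypothetical and not taken from the dataset or from any of the papers.

```python
import random
from collections import defaultdict


def coarse_type(fine_type):
    """Map a fine-grained label such as 'person-musician' back to its coarse type."""
    return fine_type.split("-", 1)[0]


def sample_few_shot(examples, k, seed=0):
    """Keep up to k training examples per fine-grained type; the rest are held out for evaluation."""
    rng = random.Random(seed)
    by_type = defaultdict(list)
    for example in examples:
        by_type[example["type"]].append(example)

    support, query = [], []
    for entity_type in sorted(by_type):  # sorted for reproducibility
        group = by_type[entity_type][:]
        rng.shuffle(group)
        support.extend(group[:k])   # the "few shots" the model may learn from
        query.extend(group[k:])     # unseen examples used to measure accuracy
    return support, query
```

Evaluating on the held-out examples of each fine-grained type gives a more realistic picture of how a model behaves when a customer supplies only a handful of examples of a brand-new entity.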

The final step of this project was to use the knowledge I had gained and the models that I had tested to train a model for production. This involved extensive testing with different model types and configurations, and tuning the training parameters to achieve the highest F1 score. This training was all done on GPU machines on Azure. After the model was trained, I had to develop the interface to pre-process the data, run inference on the model, and post-process the response. And finally, I deployed it into production!
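The shape of that interface can be sketched as three small steps. Everything here is an assumption made for illustration: the whitespace tokenizer, the BIO tag format, and especially `fake_tagger`, which stands in for the trained model so that the example runs without one.

```python
def preprocess(utterance):
    """Normalize the text and split it into tokens (a real system would use the model's tokenizer)."""
    return utterance.lower().split()


def postprocess(tokens, tags):
    """Merge per-token BIO tags into (entity_type, text) pairs."""
    entities = []
    current_type, current_tokens = None, []

    def flush():
        if current_type is not None:
            entities.append((current_type, " ".join(current_tokens)))

    for token, tag in zip(tokens, tags):
        continues = tag.startswith("I-") and tag[2:] == current_type
        if continues:
            current_tokens.append(token)
        elif tag.startswith(("B-", "I-")):
            flush()  # close any open entity before starting a new one
            current_type, current_tokens = tag[2:], [token]
        else:  # "O": outside any entity
            flush()
            current_type, current_tokens = None, []
    flush()
    return entities


def extract_entities(utterance, tag_tokens):
    """Run pre-processing, inference (via the supplied tagger), and post-processing."""
    tokens = preprocess(utterance)
    tags = tag_tokens(tokens)
    if len(tags) != len(tokens):
        raise ValueError("the tagger must return exactly one tag per token")
    return postprocess(tokens, tags)


def fake_tagger(tokens):
    """Hypothetical stand-in for model inference: tags known pizza toppings."""
    toppings = {"pepperoni", "mushrooms"}
    return ["B-TOPPING" if token in toppings else "O" for token in tokens]


result = extract_entities("Large pizza with pepperoni and mushrooms", fake_tagger)
```

Passing the tagger in as a function keeps the pre- and post-processing testable on their own, independent of whichever model ends up being deployed.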